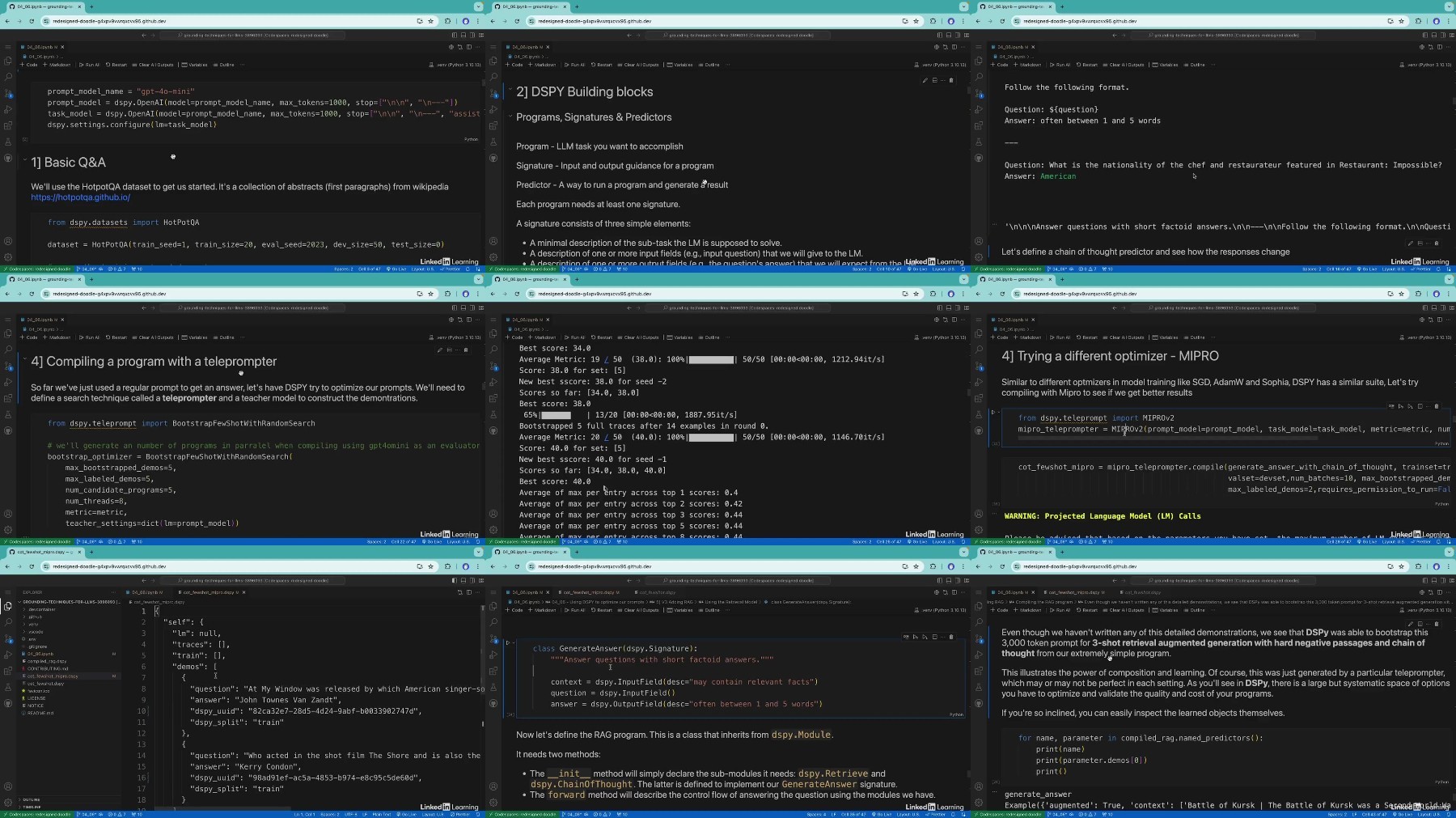

LinkedIn - Grounding Techniques for LLMs

433.27 MB | 00:15:27 | mp4 | 1280x720 | 16:9

Genre: eLearning | Language: English

Files Included:

01 - Understanding grounding techniques for LLMs (2.48 MB)

02 - Setting up your LLM environment (14.48 MB)

01 - What is a hallucination (1.7 MB)

02 - Hallucination examples (1.95 MB)

03 - Comparing hallucinations across LLMs (7.93 MB)

04 - Dangers of hallucinations (6.95 MB)

05 - Challenge Finding a hallucination (893.54 KB)

06 - Solution Finding a hallucination (6.22 MB)

01 - Training LLMs on time-sensitive data (4.42 MB)

02 - Poorly curated training data (6.41 MB)

03 - Faithfulness and context (9.39 MB)

04 - Ambiguous responses (5.56 MB)

05 - Incorrect output structure (5.16 MB)

06 - Declining to respond (10.38 MB)

07 - Fine-tuning hallucinations (8.25 MB)

08 - LLM sampling techniques and adjustments (10.76 MB)

09 - Bad citations (4.28 MB)

10 - Incomplete information extraction (8.24 MB)

01 - Few-shot learning (5.06 MB)

02 - Chain of thought reasoning (7.49 MB)

03 - Structured templates (4.35 MB)

04 - Retrieval-augmented generation (6.92 MB)

05 - Updating LLM model versions (10.12 MB)

06 - Model fine-tuning for mitigating hallucinations (24.23 MB)

07 - Orchestrating workflows through model routing (18.26 MB)

08 - Challenge Automating ecommerce reviews with LLMs (3.28 MB)

09 - Solution Automating ecommerce reviews with LLMs (8.85 MB)

01 - Creating LLM evaluation pipelines (8.48 MB)

02 - LLM self-assessment pipelines (19.87 MB)

03 - Human-in-the-loop systems (15.14 MB)

04 - Specialized models for hallucination detection (24.38 MB)

05 - Building an evaluation dataset (12.84 MB)

06 - Optimizing prompts with DSPY (48.75 MB)

07 - Optimizing hallucination detections with DSPY (19.31 MB)

08 - Real-world LLM user testing (16.48 MB)

09 - Challenge A more well-rounded AI trivia agent (1.36 MB)

10 - Solution A more well-rounded AI trivia agent (8.42 MB)

01 - Ragas Evaluation paper (9.2 MB)

02 - Hallucinations in large multilingual translation models (15.26 MB)

03 - Do LLMs know what they don't know (10.33 MB)

04 - Set the Clock LLM temporal fine-tuning (8.85 MB)

05 - Review of hallucination papers (8.93 MB)

01 - Continue your practice of grounding techniques for LLMs (1.4 MB)

